Troubleshooting

1 Troubleshooting FAQ

Please check the questions below. If you cannot find the answer you are looking for, you can try asking on magasin’s community discussions forum.

1.1 How can I set the correct Kubernetes cluster in kubectl?

If you run kubectl cluster-info and it is not pointing to the Kubernetes cluster you want to use for magasin, you can use the following commands.

Get the list of different contexts available:

kubectl config get-contexts

Which may output something like:

CURRENT   NAME             CLUSTER          AUTHINFO         NAMESPACE
          docker-desktop   docker-desktop   docker-desktop   default
*         magasin          docker-desktop   docker-desktop   default
          magasin-dev      docker-desktop   docker-desktop   default
          minikube         minikube         minikube         default

Use the correct cluster (i.e. context). Currently, the context magasin is selected, but to use magasin-dev you can run this command:

kubectl config use-context magasin-dev
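Before switching, it can help to confirm which context is currently active. The sketch below wraps the two kubectl commands in a small shell function. The function name use_context_if_needed is hypothetical and is not part of magasin or kubectl.

```shell
# Hypothetical helper (not part of magasin): switch the kubectl context
# only when the active one differs from the one requested.
use_context_if_needed() {
  wanted="$1"
  # `kubectl config current-context` prints the name of the active context.
  current="$(kubectl config current-context)"
  if [ "$current" = "$wanted" ]; then
    echo "already using $wanted"
  else
    kubectl config use-context "$wanted"
  fi
}
```

For example, use_context_if_needed magasin-dev switches to magasin-dev unless it is already selected.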

1.2 Superset error Init:ImagePullBackOff during installation on ARM64 (Apple M1, M2…) architectures

If the installation reports an error for superset, and the status of the pods then looks like this:

kubectl get pods --namespace magasin-superset

NAME                               READY   STATUS                  RESTARTS   AGE
superset-68865d55bc-rvxq5          0/1     Init:ImagePullBackOff   0          68m
superset-init-db-c9vcr             0/1     Init:ImagePullBackOff   0          68m
superset-postgresql-0              1/1     Running                 0          68m
superset-redis-master-0            1/1     Running                 0          68m
superset-worker-6c65786947-vcwvx   0/1     Init:ImagePullBackOff   0          68m

Then your machine may be using an ARM64 architecture. Superset does not provide multi-architecture images, and by default the helm chart uses the image for the x86 architecture (also known as amd64).
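To confirm the architecture before changing the image, one option is to ask Kubernetes which architecture the node reports. This is a minimal sketch that assumes a single-node cluster such as Docker Desktop. The helper name needs_arm64_image is hypothetical.

```shell
# Hypothetical helper: succeed when the given architecture string is ARM64.
# Kubernetes nodes report "arm64"; `uname -m` on Linux reports "aarch64".
needs_arm64_image() {
  case "$1" in
    arm64|aarch64) return 0 ;;
    *) return 1 ;;
  esac
}

# Example check against the first node of the cluster (requires kubectl access):
# arch="$(kubectl get nodes -o jsonpath='{.items[0].status.nodeInfo.architecture}')"
# needs_arm64_image "$arch" && echo "use the ARM64 superset image tags"
```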

To fix this issue, create a file called superset.yaml and paste the following contents:

superset.yaml

image:
  repository: apache/superset
  tag: dfc614bdc3c8daaf21eb8a0d1259901399af7dd8-lean310-linux-arm64-3.9-slim-bookworm
  pullPolicy: IfNotPresent

initImage:
  repository: apache/superset
  # ARM64 (M1, M2...)
  tag: dfc614bdc3c8daaf21eb8a0d1259901399af7dd8-dockerize-linux-arm64-3.9-slim-bookworm
  pullPolicy: IfNotPresent

Then, manually install superset by running the command:

helm upgrade superset magasin/superset --namespace magasin-superset --create-namespace -f superset.yaml -i

1.3 Explore issues within the installation

To check whether all the helm charts were properly installed, run this command:

helm list --all-namespaces

It may output something like:

NAME       NAMESPACE          REVISION   UPDATED                                STATUS     CHART              APP VERSION
dagster    magasin-dagster    1          2024-01-26 08:00:11.343903 +0300 EAT   deployed   dagster-1.6.0      1.6.0
daskhub    magasin-daskhub    1          2024-01-26 07:56:59.908074 +0300 EAT   deployed   daskhub-2024.1.0   jh3.2.1-dg2023.9.0
drill      magasin-drill      1          2024-01-26 07:56:50.414646 +0300 EAT   deployed   drill-0.6.1        1.21.1-3.9.1
operator   magasin-operator   1          2024-01-26 08:05:29.432176 +0300 EAT   deployed   operator-5.0.11    v5.0.11
superset   magasin-superset   1          2024-01-26 08:52:53.146091 +0300 EAT   failed     superset-0.10.15   3.0.1
tenant     magasin-tenant     1          2024-01-26 08:05:35.925144 +0300 EAT   deployed   tenant-5.0.11      v5.0.11

In this case superset, which was installed in the magasin-superset namespace, failed.
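With many releases installed, the failed ones can be picked out of the listing automatically. The following is a minimal sketch that filters the text output of helm list. The function name failed_releases is hypothetical.

```shell
# Hypothetical helper: read `helm list --all-namespaces` output on stdin and
# print "NAME NAMESPACE" for every release whose STATUS column is "failed".
# The UPDATED column contains spaces, so every field after REVISION is scanned.
failed_releases() {
  awk 'NR > 1 {
    for (i = 4; i <= NF; i++) {
      if ($i == "failed") { print $1, $2; break }
    }
  }'
}

# Usage (requires helm access to the cluster):
# helm list --all-namespaces | failed_releases
```

helm list also accepts a --failed flag that restricts the listing to failed releases, which may be simpler when no further processing is needed.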

We can gather more information by getting the list of pods (containers) of superset’s namespace:

kubectl get pods --namespace magasin-superset

NAME                               READY   STATUS                  RESTARTS   AGE
superset-68865d55bc-rvxq5          0/1     Init:ImagePullBackOff   0          68m
superset-init-db-c9vcr             0/1     Init:ImagePullBackOff   0          68m
superset-postgresql-0              1/1     Running                 0          68m
superset-redis-master-0            1/1     Running                 0          68m
superset-worker-6c65786947-vcwvx   0/1     Init:ImagePullBackOff   0          68m

Lastly, we can get details of one of the pods that did not successfully launch using:

kubectl describe pod superset-68865d55bc-rvxq5 --namespace magasin-superset

Events:
  Type    Reason   Age                    From     Message
  ----    ------   ----                   ----     -------
  Normal  BackOff  2m38s (x276 over 66m)  kubelet  Back-off pulling image "apache/superset:dockerize"

In this case it seems there is an error getting the image. One option is to try to delete the pod. Kubernetes will create a new one:

kubectl delete pod superset-68865d55bc-rvxq5 --namespace magasin-superset

The new pod will have a different name, so we need to run kubectl get pods --namespace magasin-superset again.
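Because pod names change after each deletion, it can be convenient to look pods up by status instead of by name. This is a minimal sketch that filters the output of kubectl get pods for a single namespace. The function name pods_with_status is hypothetical.

```shell
# Hypothetical helper: read `kubectl get pods --namespace <ns>` output on stdin
# and print the names of pods whose STATUS column equals the given value.
pods_with_status() {
  awk -v status="$1" 'NR > 1 && $3 == status { print $1 }'
}

# Usage (requires kubectl access to the cluster):
# kubectl get pods --namespace magasin-superset | pods_with_status Init:ImagePullBackOff
```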

If this does not work, an alternative is to try to reinstall the helm chart manually. First uninstall the current chart as below:

helm uninstall superset --namespace magasin-superset

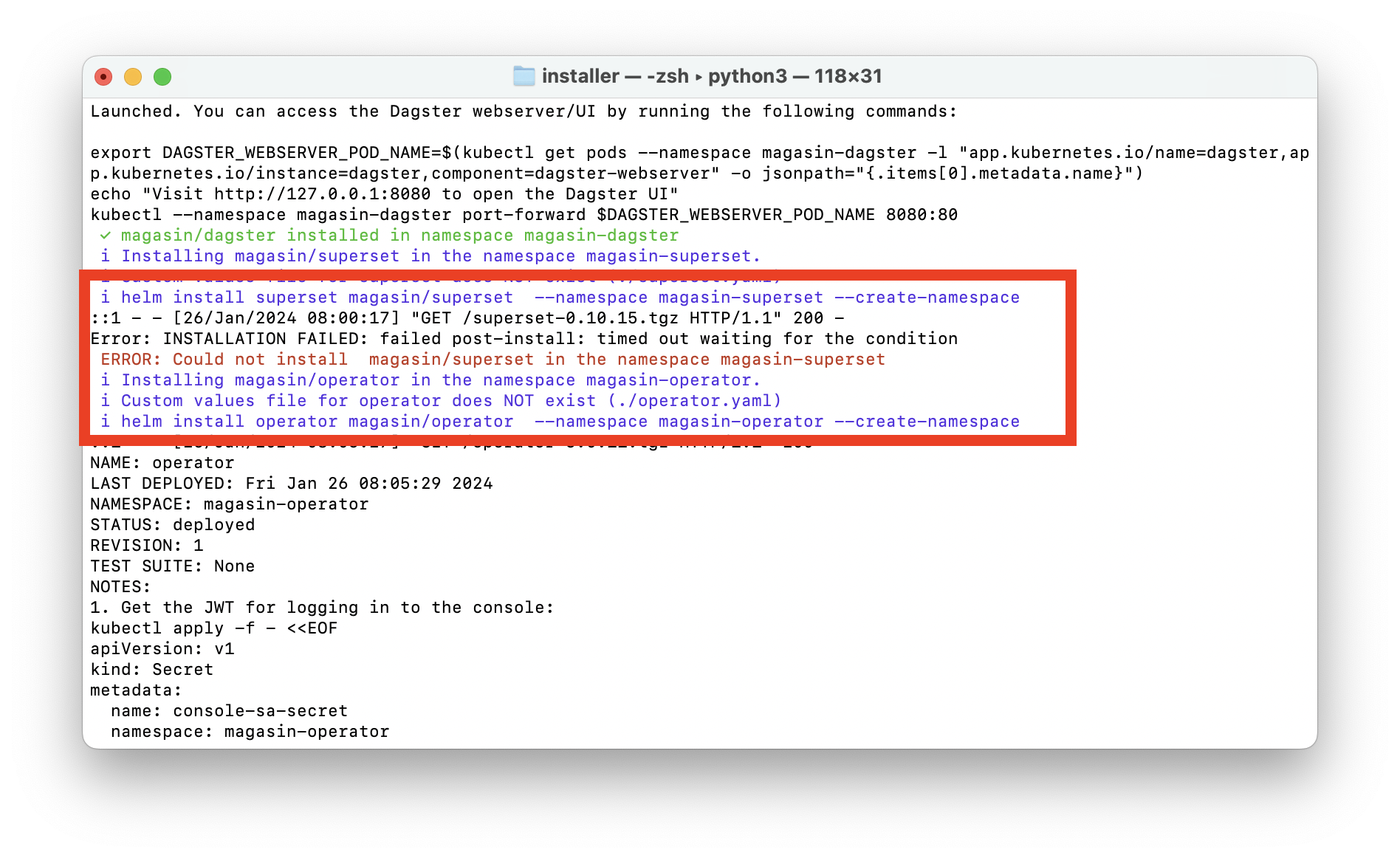

release "superset" uninstalled

Then install the magasin component manually. We will use the same command that was used by the installer (which you can see in the screenshot with the error):

helm install superset magasin/superset --namespace magasin-superset

If this does not work, you may try to find similar issues within the magasin discussion forum and/or file an issue.

1.4 After installation a container is not in Completed or Running status

A few minutes after the installation, all the containers (pods) in the magasin-* namespaces should be in Completed or Running status. If there is an issue, some of them may show a different status.

You can check the status by running:

kubectl get pods --all-namespaces

Let’s see an example:

NAMESPACE       NAME         READY   STATUS    RESTARTS        AGE
...
...
magasin-drill   drillbit-0   0/1     Running   15 (102s ago)   102m
magasin-drill   drillbit-1   0/1     Pending   0               102m
magasin-drill   zk-0         1/1     Running   0               102m

In the case above we see that the drillbit-* pods are having issues. One is Running but is not ready and has restarted many times, and the other is in Pending status. To inspect what is going on you can run:

kubectl describe pod drillbit-0 --namespace magasin-drill

Name:             drillbit-0
Namespace:        magasin-drill
Priority:         0
Service Account:  drill-sa
Node:             docker-desktop/192.168.65.3
Start Time:       Fri, 12 Jan 2024 15:50:47 +0300
Labels:           app=drill-app
                  apps.kubernetes.io/pod-index=0
                  controller-revision-hash=drillbit-b668897d5
                  statefulset.kubernetes.io/pod-name=drillbit-0
Annotations:      <none>
Status:           Running
IP:               10.1.0.106
IPs:
  IP:           10.1.0.106
Controlled By:  StatefulSet/drillbit
...
...
Events:
  Type     Reason     Age                  From     Message
  ----     ------     ----                 ----     -------
  Normal   Pulled     41m (x3 over 54m)    kubelet  (combined from similar events): Successfully pulled image "merlos/drill:1.21.1-deb" in 28.915s (28.915s including waiting)
  Warning  Unhealthy  26m (x135 over 99m)  kubelet  Readiness probe failed: Running drill-env.sh...
PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
User=drill
/opt/drill/drillbit.pid file is present but drillbit is not running.
[WARN] Only DRILLBIT_MAX_PROC_MEM is defined. Auto-configuring for Heap & Direct memory
[INFO] Attempting to start up Drill with the following settings
  DRILL_HEAP=1G
  DRILL_MAX_DIRECT_MEMORY=3G
  DRILLBIT_CODE_CACHE_SIZE=768m
[WARN] Total Memory Allocation for Drillbit (4GB) exceeds available free memory (1GB)
[WARN] Drillbit will start up, but can potentially crash due to oversubscribing of system memory.
  Warning  Unhealthy  70s (x131 over 99m)  kubelet  Liveness probe failed: Running drill-env.sh...
PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
User=drill
/opt/drill/drillbit.pid file is present but drillbit is not running.
[WARN] Only DRILLBIT_MAX_PROC_MEM is defined. Auto-configuring for Heap & Direct memory
[INFO] Attempting to start up Drill with the following settings
  DRILL_HEAP=1G
  DRILL_MAX_DIRECT_MEMORY=3G
  DRILLBIT_CODE_CACHE_SIZE=768m
[WARN] Total Memory Allocation for Drillbit (4GB) exceeds available free memory (1GB)
[WARN] Drillbit will start up, but can potentially crash due to oversubscribing of system memory.

As the warnings show, the problem is insufficient memory: the drillbit is configured to use 4GB, but only 1GB is free. In this case we need to run the pod in a cluster whose nodes have more RAM.
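To compare what a node offers with what the drillbit asks for, the allocatable memory reported by Kubernetes can be converted to GiB. This is a minimal sketch. The helper name ki_to_gib is hypothetical, and it assumes the value is expressed in Ki (for example 8039920Ki), which is common but not guaranteed. Allocatable memory is the total a node offers to pods, not the amount currently free.

```shell
# Hypothetical helper: convert a Kubernetes memory quantity in Ki
# (for example "4194304Ki") to GiB with one decimal place.
ki_to_gib() {
  echo "$1" | awk '{ sub(/Ki$/, "", $1); printf "%.1f\n", $1 / 1048576 }'
}

# Usage (requires kubectl access to the cluster):
# mem="$(kubectl get nodes -o jsonpath='{.items[0].status.allocatable.memory}')"
# ki_to_gib "$mem"
```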